Artificial Intelligence (AI) has transformed how we interact with technology, from chatbots like Grok to virtual assistants and content generators. But what makes these systems tick? At the core of many AI systems lies a powerful combination of transformers—a groundbreaking neural network architecture—and a complex training process that turns raw data into intelligent responses. In this article, we’ll dive into the mechanics of transformers and the training pipeline that powers modern AI, demystifying the tech behind the magic.

What Are Transformers?

Transformers are the backbone of most state-of-the-art large language models (LLMs) driving conversational AI. Introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., transformers revolutionized natural language processing (NLP) by offering a faster, more scalable way to process sequences of data, like words in a sentence. Older models such as recurrent neural networks (RNNs) read a sequence one token at a time, while transformers look at the whole sequence at once.

The Mechanics of Transformers

Transformers excel at understanding and generating text due to their unique design. Here’s how they work:

- Input Representation: Text is broken into tokens (words or subwords) and converted into numerical vectors called embeddings. Positional encodings are added to capture word order, as transformers process all tokens simultaneously rather than sequentially.

- Attention Mechanism: The heart of the transformer is its attention system, which weighs how relevant every other token is when building the representation of each token. For example, in “The cat sat on the mat,” the model may attend strongly to “cat” when processing “sat,” since the cat is what sat. Multi-head attention runs several attention operations in parallel, each with its own learned weights, so different heads can capture different relationships (e.g., syntax vs. meaning).

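- Encoder-Decoder Structure: The original transformer pairs two stacks of layers:

  - Encoders process the input to build a rich representation, using stacked layers of self-attention and feed-forward networks.

  - Decoders generate output, such as responses or translations, attending both to the output produced so far and (via cross-attention) to the encoded input.

  Many modern models keep only one half: BERT is encoder-only, while GPT-style chat models generate text with a decoder-only stack.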

- Feed-Forward and Normalization: Each layer also includes a feed-forward neural network, plus residual connections and layer normalization that stabilize training of deep stacks of layers.
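
The input and attention steps above can be sketched in a few lines of plain Python. This is a minimal, single-head illustration under simplifying assumptions: the embeddings are made-up numbers, the positional encodings follow the sinusoidal scheme from the original paper, and the token vectors are reused directly as queries, keys, and values instead of passing through learned projection matrices. The optional `causal` flag hides future tokens, as text-generating decoders do. The function names are illustrative, not from any library.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sin for even dimensions, cos for odd ones."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V, causal=False):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, computed row by row."""
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # decoder-style mask: ignore future tokens
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)  # how much token i attends to each token j
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out

# Toy embeddings for a 3-token sentence with d_model = 4 (made-up numbers).
emb = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.1, 0.0]]
pe = positional_encoding(3, 4)
x = [[e + p for e, p in zip(er, pr)] for er, pr in zip(emb, pe)]

# Real models derive Q, K and V from x with learned projection matrices and run
# several heads in parallel; this sketch reuses x directly as a single head.
y = attention(x, x, x, causal=True)
print(len(y), len(y[0]))  # 3 4
```

Every output row is a weighted average of the value vectors, and all rows are computed from the same inputs, which is what lets real implementations evaluate them in parallel as matrix multiplications.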

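Because every token in a sequence is processed in parallel during training, transformers make far better use of GPUs and TPUs than RNNs, which is what made training on massive datasets practical. This is why they power models like GPT, BERT, and Grok, although attention's cost still grows quadratically with sequence length, so very long inputs remain expensive.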

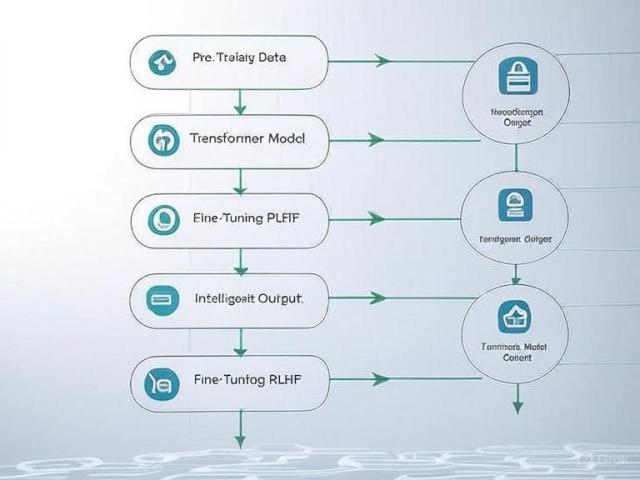

The Training Pipeline: From Data to Intelligence

Training an AI model like an LLM is a multi-stage process that turns raw text into a system capable of fluent, often human-like responses. It’s computationally intensive, requiring vast datasets and specialized hardware. Here’s a breakdown of the key phases:

1. Pre-Training: Building General Knowledge

Pre-training is where the model learns the structure of language. It’s like teaching a child to read by exposing them to millions of books.

- Objective: The model learns to predict missing or upcoming tokens in text. Common methods include:

  - Masked Language Modeling (MLM): Randomly hide tokens and predict them from the surrounding context (e.g., BERT).

  - Next-Token Prediction: Predict the next token given all previous ones (e.g., GPT-style models like Grok).

- Data: Billions to trillions of tokens from diverse sources, such as web pages, books, and code repositories. The data is filtered and deduplicated to reduce noise and bias, though bias mitigation remains an open challenge.

- Process: Text is fed through the transformer in batches. The model computes a loss (typically cross-entropy, measuring how wrong its predictions are), backpropagation computes how much each parameter contributed to that loss, and an optimizer such as AdamW updates the parameters to reduce it.

- Scale: Training can take weeks or longer on clusters of thousands of GPUs or TPUs, consuming massive computational resources (GPT-3, for example, required roughly 3 × 10^23 floating-point operations).
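
The objective, process, and scale bullets can be made concrete with a small sketch. `next_token_loss` computes the cross-entropy loss that a GPT-style model minimizes, and `training_flops` applies a widely used rule of thumb, C ≈ 6ND (about six floating-point operations per parameter per training token, covering the forward and backward passes). Both are hypothetical helpers written for illustration; the GPT-3 figures assumed below (about 175 billion parameters, about 300 billion training tokens) are the commonly cited ones.

```python
import math

def next_token_loss(token_ids, logits):
    """Mean cross-entropy for next-token prediction.

    logits[t] holds the model's scores over the vocabulary after reading
    token_ids[0..t]; the training target at position t is token_ids[t + 1].
    """
    n = len(token_ids) - 1
    total = 0.0
    for t in range(n):
        row = logits[t]
        m = max(row)
        log_z = m + math.log(sum(math.exp(s - m) for s in row))  # log-sum-exp
        total += log_z - row[token_ids[t + 1]]  # -log P(correct next token)
    return total / n

def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: C ≈ 6 * N * D."""
    return 6 * n_params * n_tokens

# Vocabulary of 4 tokens; all-zero logits mean the model has no preference yet.
tokens = [2, 0, 3]
untrained = [[0.0] * 4, [0.0] * 4]
print(round(next_token_loss(tokens, untrained), 3))  # 1.386, i.e. log(4)

# GPT-3 scale: about 175e9 parameters trained on about 300e9 tokens.
print(f"{training_flops(175e9, 300e9):.2e}")  # 3.15e+23
```

With all-zero logits the loss equals log(4), the cost of guessing uniformly among four tokens; pre-training pushes this number down by updating the parameters batch after batch. The 6ND estimate ignores attention's extra cost but lands at roughly 3 × 10^23 FLOPs for GPT-3.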

2. Fine-Tuning: Sharpening the Edge

Pre-training gives the model general knowledge, but fine-tuning tailors it for specific tasks, like answering questions or following instructions.

- Supervised Fine-Tuning (SFT): The model trains on labeled datasets, such as question-answer pairs, to learn task-specific behavior.

- Reinforcement Learning from Human Feedback (RLHF): Human evaluators rank model outputs for quality, safety, or helpfulness. A reward model is trained on these rankings, and the LLM is optimized (using techniques like Proximal Policy Optimization) to maximize rewards.

- Parameter-Efficient Fine-Tuning (PEFT): Methods like LoRA freeze the pre-trained weights and train only small add-on matrices, updating a tiny fraction of the parameters and making fine-tuning faster and less resource-intensive.
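
Two of the fine-tuning ideas above can be sketched concretely. `lora_forward` follows the usual LoRA formulation, in which a frozen weight matrix W is adjusted by a trainable low-rank product B·A scaled by alpha / r, and `reward_pair_loss` is a pairwise (Bradley-Terry style) loss commonly used to train RLHF reward models from ranked pairs of answers. Both are minimal, hypothetical helpers in plain Python, not the API of any fine-tuning library.

```python
import math

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W, A, B, x, alpha):
    """LoRA layer: y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pre-trained weight; only the low-rank
    factors A (r x d_in) and B (d_out x r) would be trained.
    """
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]

def reward_pair_loss(r_chosen, r_rejected):
    """Pairwise reward-model loss: -log sigmoid(r_chosen - r_rejected)."""
    z = r_chosen - r_rejected
    # Numerically stable form of log(1 + exp(-z)).
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))

# B starts at zero, so the adapted layer initially behaves exactly like W.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, -0.5]]  # rank r = 1
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, [3.0, 4.0], alpha=2.0))  # [3.0, 4.0]

# Trainable parameters for one 4096 x 4096 weight matrix with rank r = 8.
d, r = 4096, 8
print(d * d, r * (d + d))  # 16777216 65536

# The loss is smaller when the reward model scores the human-preferred answer higher.
print(reward_pair_loss(2.0, 0.0) < reward_pair_loss(0.0, 2.0))  # True
```

The parameter count shows why LoRA is cheap: at rank 8, the adapter for one 4096 × 4096 matrix has about 0.4% as many trainable values as the full matrix.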

3. Key Training Techniques

Training an LLM involves sophisticated techniques to ensure efficiency and performance:

- Batch Size and Learning Rate: Large batches (e.g., 1 million tokens per step) and learning rate schedules, typically a short warmup followed by gradual decay, help training converge stably.

- Regularization: Techniques such as dropout and weight decay help prevent overfitting so the model generalizes beyond its training data.

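- Distributed Training: Data parallelism splits each batch across GPUs that each hold a copy of the model, while model parallelism splits the model's layers or weight matrices across GPUs. Frameworks like PyTorch provide tools for both strategies.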

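- Evaluation: Metrics like perplexity, the exponential of the average per-token loss, roughly measure how “surprised” the model is by held-out text; lower is better, and tracking it guides training progress.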
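
The learning rate schedule and the perplexity metric can be sketched as follows. The warmup-then-cosine shape is one common choice, assumed here for illustration; real training runs tune the peak rate, warmup length, and floor. `lr_at` and `perplexity` are hypothetical helper names.

```python
import math

def lr_at(step, max_lr, warmup_steps, total_steps, min_lr=0.0):
    """Linear warmup to max_lr, then cosine decay down to min_lr at total_steps."""
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * progress))

def perplexity(token_log_probs):
    """exp(mean negative log-likelihood) of the correct tokens; lower is better."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)

# Peak learning rate 3e-4, 100 warmup steps, 1,000 steps in total.
for step in (0, 50, 100, 550, 1000):
    print(step, lr_at(step, 3e-4, 100, 1000))

# A model that gives every correct token probability 1/4 is, on average,
# as "surprised" as if it were guessing among 4 equally likely options.
print(perplexity([math.log(0.25)] * 10))  # ≈ 4.0
```

Perplexity is just the exponentiated cross-entropy loss, so a falling training loss and a falling validation perplexity tell the same story.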

Beyond the Model: The Bigger Picture

While transformers and training are central, an AI system like Grok involves more:

- Interface: The chat window or app isn’t just a front-end; it processes inputs, formats outputs, and may trigger specialized modes (e.g., Grok’s DeepSearch for web queries).

- Memory Systems: These store conversation history for context-aware responses.

- External Tools: Integrations like web search or file analysis expand functionality beyond raw text generation.

- Moderation: Safety layers aim to keep responses ethical and appropriate.
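
A memory system can be as simple as trimming the conversation so that it fits the model's context window. The sketch below assumes a hypothetical `build_context` helper and counts words as a stand-in for real tokens; it shows one basic strategy, not how Grok or any particular product manages memory.

```python
def build_context(history, new_message, max_tokens, count_tokens):
    """Return the newest conversation turns that fit within max_tokens, oldest first.

    The new message is always kept; older turns are dropped once the budget is spent.
    """
    kept = [new_message]
    used = count_tokens(new_message)
    for turn in reversed(history):  # walk from the most recent turn backwards
        cost = count_tokens(turn)
        if used + cost > max_tokens:
            break
        kept.append(turn)
        used += cost
    return list(reversed(kept))

def count_words(text):
    # Stand-in for a real tokenizer: one "token" per whitespace-separated word.
    return len(text.split())

history = [
    "user: What is a transformer?",
    "assistant: A neural network architecture built around attention.",
    "user: Who introduced it?",
    "assistant: Vaswani et al. in 2017.",
]
context = build_context(history, "user: How is it trained?", 16, count_words)
print(context)
```

Production systems may also summarize older turns or retrieve relevant ones instead of simply dropping them; this sketch only shows the budget-trimming idea.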

Why It Matters

Understanding transformers and training reveals the complexity behind AI’s seemingly effortless responses. Transformers enable models to grasp the nuances of language, while training imbues them with knowledge and adaptability. For tech enthusiasts visiting ReaperPCs.com, this insight highlights the computational power and ingenuity driving AI—something to appreciate whether you’re building a high-end PC or exploring AI tools.